Chapter 9 Miscellaneous Topics

model selection and conditional likelihood

variance/bias trade off

error propagation

MCMC?

N-mixture ideas

phylogenetic and life history/trait stuff

PIF methods

These are just reminders, to be deleted later

You can label chapter and section titles using {#label} after them, e.g., we can reference Chapter 1. If you do not manually label them, there will be automatic labels anyway.

Figures and tables with captions will be placed in figure and table environments, respectively.

par(mar = c(4, 4, .1, .1))

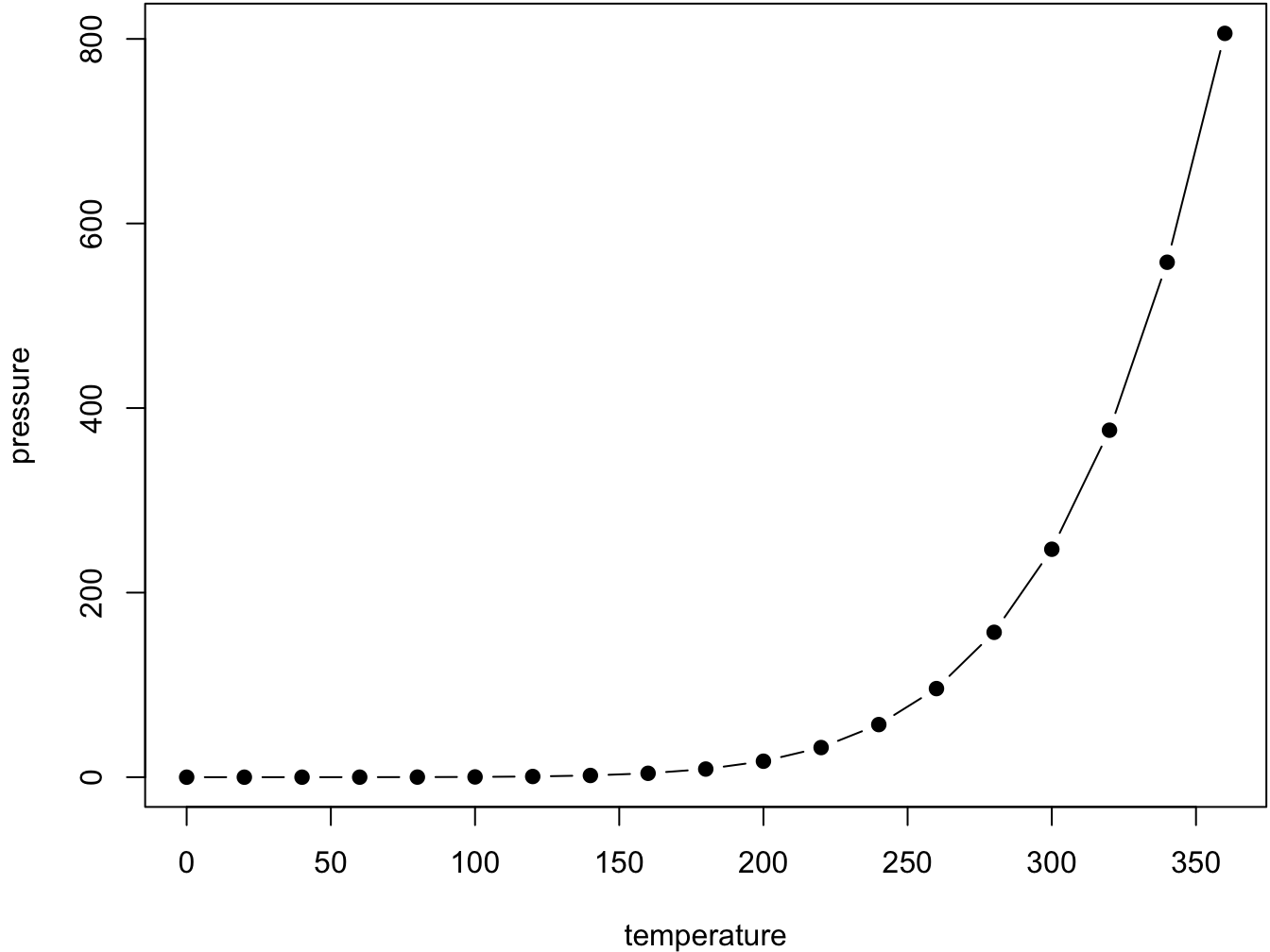

plot(pressure, type = 'b', pch = 19)

Figure 9.1: Here is a nice figure!

Reference a figure by its code chunk label with the fig: prefix, e.g., see Figure 9.1. Similarly, you can reference tables generated from knitr::kable(), e.g., see Table 9.1.

knitr::kable(

head(iris, 20), caption = 'Here is a nice table!',

booktabs = TRUE

)| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |

|---|---|---|---|---|

| 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 5.0 | 3.6 | 1.4 | 0.2 | setosa |

| 5.4 | 3.9 | 1.7 | 0.4 | setosa |

| 4.6 | 3.4 | 1.4 | 0.3 | setosa |

| 5.0 | 3.4 | 1.5 | 0.2 | setosa |

| 4.4 | 2.9 | 1.4 | 0.2 | setosa |

| 4.9 | 3.1 | 1.5 | 0.1 | setosa |

| 5.4 | 3.7 | 1.5 | 0.2 | setosa |

| 4.8 | 3.4 | 1.6 | 0.2 | setosa |

| 4.8 | 3.0 | 1.4 | 0.1 | setosa |

| 4.3 | 3.0 | 1.1 | 0.1 | setosa |

| 5.8 | 4.0 | 1.2 | 0.2 | setosa |

| 5.7 | 4.4 | 1.5 | 0.4 | setosa |

| 5.4 | 3.9 | 1.3 | 0.4 | setosa |

| 5.1 | 3.5 | 1.4 | 0.3 | setosa |

| 5.7 | 3.8 | 1.7 | 0.3 | setosa |

| 5.1 | 3.8 | 1.5 | 0.3 | setosa |

9.1 Binomial model and censoring

Try cloglog with a rare species, like BOCH

#spp <- "OVEN" # which species

spp <- "BOCH" # which species

#spp <- "CAWA" # which species

x <- data.frame(

josm$surveys,

y=as.numeric(ytot[rownames(x), spp]))

x$y01 <- ifelse(x$y > 0, 1, 0)

table(x$y)

mP <- glm(y ~ Decid * ConifWet, x, family=poisson)

mBc <- glm(y01 ~ Decid * ConifWet, x, family=binomial("cloglog"))

mBl <- glm(y01 ~ Decid * ConifWet, x, family=binomial("logit"))

coef(mP)

coef(mBc)

coef(mBl)

plot(fitted(mBc) ~ fitted(mP), col=4,

ylim=c(0, max(fitted(mP))), xlim=c(0, max(fitted(mP))))

points(exp(model.matrix(mBc) %*% coef(mBc)) ~ fitted(mP), col=2)

abline(0,1)9.2 Optimal partitioning

oc <- opticut(as.matrix(ytot) ~ 1, strata = x$HAB, dist="poisson")

plot(oc)9.3 Optilevels

When we have categorical or compositional (when e.g. proportions add up to 1, also called

the unit sum constraint) data,

we often want to simplify and merge classes or add up columns.

We can do this based on the structural understanding of these land cover classes

(call all treed classes Forest, like what we did for FOR, WET and AHF).

Alternatively, we can let the data (the birds) tell us how to merge the classes. The algorithm does the following:

- fit model with all classes,

- order estimates for each class from smallest to largest,

- merge classes that are near each others, 2 at a time, moving from smallest to largest,

- compare \(\Delta\)AIC or \(\Delta\)BIC values for the merged models and pick the smallest,

- treat this best merged model as an input in step 1 and star over until \(\Delta\) is negative (no improvement).

Here is the code for simplifying categories using the opticut::optilevels function:

M <- model.matrix(~HAB-1, x)

colnames(M) <- levels(x$HAB)

ol1 <- optilevels(x$y, M, dist="poisson")

sort(exp(coef(bestmodel(ol1))))

## estimates

exp(ol1$coef)

## optimal classification

ol1$rank

data.frame(combined_levels=ol1$levels[[length(ol1$levels)]])Here is the code for simplifying compositional data:

ol2 <- optilevels(x$y, x[,cn], dist="poisson")

sort(exp(coef(bestmodel(ol2))))

## estimates

exp(ol2$coef)

## optimal classification

ol2$rank

head(groupSums(as.matrix(x[,cn]), 2, ol2$levels[[length(ol2$levels)]]))9.4 N-mixture models

9.5 Estimating abundance

Exponential model, bSims data

Note: $visits is not yet exposed

set.seed(1)

phi <- 0.5

Den <- 1

l <- bsims_init()

a <- bsims_populate(l, density=Den)

b <- bsims_animate(a, vocal_rate=phi)

tint <- 1:5

(tr <- bsims_transcribe(b, tint=tint))Multiple-visit stuff for bSims: the counting of new individuals resets for each interval (also: needs equal intervals)

tr$visits

v <- get_events(b, vocal_only=TRUE)

v <- v[v$t <= max(tint),]

v1 <- v[!duplicated(v$i),]

tmp <- v1

tmp$o <- seq_len(nrow(v1))

plot(o ~ t, tmp, type="n", ylab="Individuals",

main="Vocalization events",

ylim=c(1, nrow(b$nests)), xlim=c(0,max(tint)))

for (i in tmp$o) {

tmp2 <- v[v$i == v1$i[i],]

lines(c(tmp2$t[1], max(tint)), c(i,i), col="grey")

points(tmp2$t, rep(i, nrow(tmp2)), cex=0.5)

points(tmp2$t[1], i, pch=19, cex=0.5)

}

plot(o ~ t, tmp, type="n", ylab="Individuals",

main="Vocalization events",

ylim=c(1, nrow(b$nests)), xlim=c(0,max(tint)))

for (j in seq_along(tint)) {

ii <- if (j == 1)

c(0, tint[j]) else c(tint[j-1], tint[j])

vv <- v[v$t > ii[1] & v$t <= ii[2],]

tmp <- vv[!duplicated(vv$i),]

tmp$o <- seq_len(nrow(tmp))

if (nrow(tmp)) {

for (i in tmp$o) {

tmp2 <- vv[vv$i == tmp$i[i],]

lines(c(tmp2$t[1], ii[2]), c(i,i), col="grey")

points(tmp2$t, rep(i, nrow(tmp2)), cex=0.5)

points(tmp2$t[1], i, pch=19, cex=0.5)

}

}

}

library(unmarked)

f <- function() {

a <- bsims_populate(l, density=Den)

b <- bsims_animate(a, vocal_rate=phi, move_rate=0)

tr <- bsims_transcribe(b, tint=tint)

drop(tr$visits)

}

Den <- 0.01

#ymx <- tr$visits

(ymx <- t(replicate(10, f())))

## highly dependent on K when Den is higher

nmix <- pcount(~1 ~1, unmarkedFramePCount(y=ymx), K=1000)

coef(nmix)

plogis(coef(nmix)[2])

exp(coef(nmix)[1])

Den * 100## distance function density

# check http://seankross.com/notes/dpqr/

need rmax for rdist

.g <- function(d, tau, b=2, hazard=FALSE)

if (hazard)

1-exp(-(d/tau)^-b) else exp(-(d/tau)^b)

.f <- function(d, tau, b=2, hazard=FALSE)

.g(d, tau, b, hazard) * 2*d

# this is g*h / integral

ddist <- function(x, tau, b=1, hazard=FALSE, rmax=Inf, log = FALSE){

V <- integrate(.f, lower=0, upper=rmax,

tau=tau, b=b, hazard=hazard)$value

out <- .f(x, tau=tau, b=b, hazard=hazard) / V

out[x > rmax] <- 0

if (log)

log(out) else out

}

pdist <- function(q, tau, b=1, hazard=FALSE, lower.tail = TRUE, log.p = FALSE){}

qdist <- function(p, tau, b=1, hazard=FALSE, lower.tail = TRUE, log.p = FALSE){}

#

# make output vector

# loop over out and accept reject based on weights as ddist

# can do in batches: star with n, continue with rejected n, etc

rdist <- function(n, tau, b=1, hazard=FALSE, rmax=Inf){

q99 <- uniroot(function(d) ddist(d))

}

tau <- 2.3

V <- integrate(.f, lower=0, upper=Inf, tau=tau)$value

d <- runif(10^4, 0, 10)

d <- d[rbinom(10^4, 1, .f(d, tau)/V) > 0]

hist(d)

nll <- function(tau) {

-sum(ddist(d, tau, log=TRUE))

}

optimize(nll, c(0, 100))

# once done: generate distances with rdist, and fit model with ddist

# see if AIC etc works as expectedBarker, Nicole K. S., Patricia C. Fontaine, Steve G. Cumming, Diana Stralberg, Alana Westwood, Erin M. Bayne, Péter Sólymos, Fiona K. A. Schmiegelow, Samantha J. Song, and D. J. Rugg. 2015. “Ecological Monitoring Through Harmonizing Existing Data: Lessons from the Boreal Avian Modelling Project.” Wildlife Society Bulletin 39: 480–87. https://doi.org/10.1650/CONDOR-14-108.1.

Mahon, C. Lisa, Gillian Holloway, Péter Sólymos, Steve G. Cumming, Erin M. Bayne, Fiona K. A. Schmiegelow, and Samantha J. Song. 2016. “Community Structure and Niche Characteristics of Upland and Lowland Western Boreal Birds at Multiple Spatial Scales.” Forest Ecology and Management 361: 99–116. https://doi.org/10.1016/j.foreco.2015.11.007.

Matsuoka, S. M., C. L. Mahon, C. M. Handel, Péter Sólymos, E. M. Bayne, P. C. Fontaine, and C. J. Ralph. 2014. “Reviving Common Standards in Point-Count Surveys for Broad Inference Across Studies.” Condor 116: 599–608. https://doi.org/10.1650/CONDOR-14-108.1.